Alpine Image

Introduction

The Alpine page on Docker Hub describes itself like this:

Alpine Linux is a Linux distribution built around musl libc and BusyBox. The image is only 5 MB in size and has access to a package repository that is much more complete than other BusyBox based images. This makes Alpine Linux a great image base for utilities and even production applications. Read more about Alpine Linux here and you can see how their mantra fits in right at home with Docker images. -- Alpine

What that means for a developer is that you are running your code on top of a slim and secure Linux base image. By using something this minimal, you can reduce your vulnerability footprint and also reduce the time to launch in container orchestrators such as Elastic Container Service (ECS) and App Runner.

Sample Solution

A template for this pattern can be found under the ./templates directory in the GitHub repo. You can use the template to get started with your own project. The Dockerfile included might be all you are after, and that's OK. But you can also use the sample API and the Dockerfile to experiment with different values. There are also some release configuration settings in the Cargo.toml that will be discussed further down in the article. Those settings enhance the final binary generated by Cargo.

Let's get started walking through this article.

Rust Code

The API that we are building for this example is super basic. It has two endpoints that respond on GET / and GET /health. Below is the main.rs that includes the entirety of the project.
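
The template's own main.rs lives in the repository. As a rough, dependency-free illustration of the same two endpoints, the sketch below uses only the Rust standard library. It is not the template's code (the Dockerfile comments indicate the real project builds on Tokio), and the function names route, handle, and serve as well as the response bodies are assumptions made for this example.

```rust
use std::io::{BufRead, BufReader, Write};
use std::net::{TcpListener, TcpStream};

/// Map a request line such as "GET /health HTTP/1.1" to a status and a body.
/// The bodies are placeholders (assumptions), not the template's payloads.
fn route(request_line: &str) -> (&'static str, &'static str) {
    let mut parts = request_line.split_whitespace();
    match (parts.next(), parts.next()) {
        (Some("GET"), Some("/")) => ("200 OK", "Hello from Rust on Alpine"),
        (Some("GET"), Some("/health")) => ("200 OK", "OK"),
        _ => ("404 Not Found", "Not Found"),
    }
}

/// Read one request from the connection and answer with plain text.
fn handle(mut stream: TcpStream) -> std::io::Result<()> {
    let request_line = {
        let mut reader = BufReader::new(&stream);
        let mut first = String::new();
        reader.read_line(&mut first)?;
        // Drain the remaining headers up to the blank line that ends them.
        let mut header = String::new();
        loop {
            header.clear();
            let read = reader.read_line(&mut header)?;
            if read == 0 || header.trim_end().is_empty() {
                break;
            }
        }
        first
    };
    let (status, body) = route(&request_line);
    let response = format!(
        "HTTP/1.1 {}\r\nContent-Type: text/plain\r\nContent-Length: {}\r\nConnection: close\r\n\r\n{}",
        status,
        body.len(),
        body
    );
    stream.write_all(response.as_bytes())
}

/// Accept connections one at a time and log (rather than abort on) errors.
fn serve(addr: &str) -> std::io::Result<()> {
    let listener = TcpListener::bind(addr)?;
    for stream in listener.incoming() {
        if let Err(err) = stream.and_then(handle) {
            eprintln!("connection error: {}", err);
        }
    }
    Ok(())
}

// In a binary crate this would be started with:
// fn main() -> std::io::Result<()> { serve("0.0.0.0:8080") }
```

Binding to 0.0.0.0 rather than 127.0.0.1 matters inside a container, because the published port (8080 in this article) forwards traffic to the container's network interface, not to its loopback address.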

Cargo.toml

As mentioned above, some settings in the Cargo.toml file improve the binary when building in release mode. Cargo supports profiles, so you can have one set of settings for development and another for release (among others).

Let's have a look at the Cargo.toml file:

- profile dev highlights an opt-level of 0. This tells the compiler to use no advanced optimizations.
- profile release highlights an opt-level of 3, which is the maximum optimization level. It also includes strip=true, which removes debug info and symbols from the binary.
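
One way to confirm which profile produced a given binary is to check the debug_assertions configuration flag, which Cargo enables by default for the dev profile and disables by default for the release profile. The helper below is a small sketch under that assumption: the name build_profile is made up for illustration, and a profile that overrides debug-assertions in Cargo.toml would make the answer misleading.

```rust
/// Report which Cargo profile most likely built this binary.
/// Assumes the default `debug-assertions` settings: on for dev, off for release.
fn build_profile() -> &'static str {
    if cfg!(debug_assertions) {
        "dev"
    } else {
        "release"
    }
}

// Example use at startup:
// println!("built with the {} profile", build_profile());
```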

Dockerfile

Now for the part that brings it all together. This isn't going to be a deep-dive on how to use Docker or construct Dockerfiles, but there are a few general tips below that should relate to other languages and frameworks.

A few points as we get started.

- The Dockerfile makes use of Build Arguments, which provide some override capabilities when running docker build and such from the command line.
- The next thing you'll find is that the file makes use of multi-stage builds. This allows certain layers to contain more components needed for things like compilation, while the final runtime image will be from something super slim like alpine:latest.
- Docker makes use of layers (which is beyond this article) and by combining commands into a single line, layers can be saved.

The Build

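The build stage starts from the rust:${RUST_VERSION}-alpine image, so the RUST_VERSION build argument selects the toolchain. Next, pkgconfig, openssl-dev, and libc-dev are installed, which the Dockerfile comments note are required to compile Tokio and the other crates.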

Something that might appear a bit odd is the dependency caching step. The Dockerfile copies only Cargo.toml and Cargo.lock, then does an early build of a placeholder main.rs that contains nothing but an empty main function. Docker caches that layer, so the time-consuming work of pulling and compiling the crates doesn't happen on every build, only when Cargo.toml or Cargo.lock change.

Final Packaging

With the build produced and the binary sitting in the target directory, it's time to set up the final image.

There are a few more build arguments that are created so that they can be overridden if needed and to save on typing mistakes by making them variables.

Lastly, the binary is copied from the build stage and placed in the CMD statement so that it is executed when the container is launched.

    # Build arg for controlling the Rust version
    ARG RUST_VERSION=1.77

    # Base image that is the builder that originates from Alpine
    FROM rust:${RUST_VERSION}-alpine as builder

    # adding in SSL and libc-dev as required to compile with Tokio
    # and other crates included
    RUN apk add pkgconfig openssl-dev libc-dev

    WORKDIR /usr/src/app

    # Trick Docker and Rust to cache dependencies so that future runs of Docker build
    # will happen much quicker as long as crates in the Cargo.toml and lock file don't change.
    # When they change, it'll force a refresh
    COPY Cargo.toml Cargo.lock ./
    RUN mkdir ./src && echo 'fn main() {}' > ./src/main.rs
    RUN cargo build --release

    # Replace with the real src of the project
    RUN rm -rf ./src
    COPY ./src ./src

    # break the Cargo cache
    RUN touch ./src/main.rs

    # Build the project
    # Note that in the Cargo.toml file there is a release profile that optimizes
    # this build
    RUN cargo build --release

    # Build final layer from the base alpine image
    FROM alpine:latest

    # Build arguments to allow overrides
    #   APP_USER: user that runs the binary
    #   APP_GROUP: the group for the new user
    #   EXPOSED_PORT: the port that the container is exposing
    ARG APP_USER=rust_user
    ARG APP_GROUP=rust_group
    ARG EXPOSED_PORT=8080
    ARG APP=/usr/app

    # Add the user, group and make directory for the build artifacts
    # Performing as one continuous statement to condense layers
    RUN apk update \
        && apk add openssl ca-certificates \
        && addgroup -S ${APP_GROUP} \
        && adduser -S ${APP_USER} -G ${APP_GROUP} \
        && mkdir -p ${APP}

    EXPOSE $EXPOSED_PORT
    COPY --from=builder /usr/src/app/target/release/web_app ${APP}/web_app
    RUN chown -R $APP_USER:$APP_GROUP ${APP}
    USER $APP_USER
    WORKDIR ${APP}
    CMD ["./web_app"]

Testing the Solution

Launching and testing the Dockerfile is easy. Run this command first from the template directory root:

docker build -t rust-service .

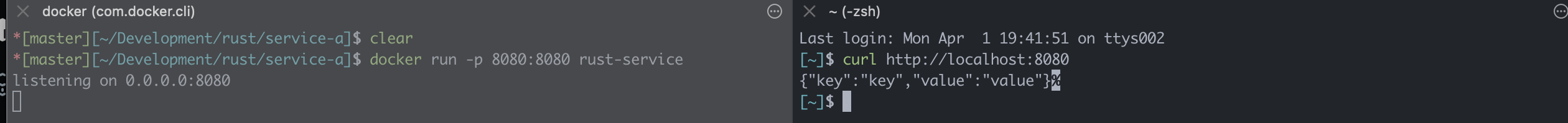

From there, you can launch the container and run a cURL command as shown in this image.

docker run -p 8080:8080 rust-service
curl http://localhost:8080/

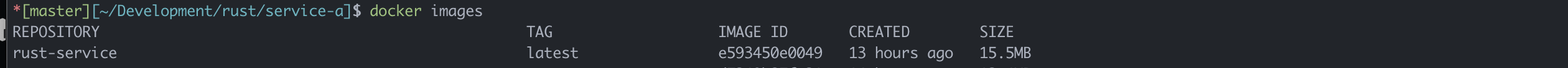

Comment on Size

As has been stated many times on this site, Rust provides amazing performance benefits when paired with serverless. Building with Alpine shows another way in which performance might not always mean just "compute" time.

Since Rust binaries are compiled, they require no runtime like Node.js, Python, .NET or Java. And no runtime means that the base Linux image can be as small as possible when hosting Rust binaries. But why does that matter? Simple. If your code is running in a container that needs to scale out, the size of the image matters. Every byte you don't need is a wasted network byte. So while a .NET image might be 100 - 200MB, a Rust image like this built upon Alpine can be less than 20MB. That could be as much as an 80 - 90% reduction in size, which at scale can make a real difference in when you need to launch new instances in your fleet and how fast they start.
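
The arithmetic behind that 80 - 90% figure can be sketched in a few lines. The image sizes are the rough numbers quoted above, while the function names and the fleet size of 50 instances are hypothetical values picked only for illustration.

```rust
/// Percentage by which an image shrinks when going from `before_mb` to `after_mb`.
fn percent_reduction(before_mb: f64, after_mb: f64) -> f64 {
    (before_mb - after_mb) * 100.0 / before_mb
}

/// Total megabytes pulled when `instances` hosts each download the image once.
fn total_pull_mb(image_mb: f64, instances: u32) -> f64 {
    image_mb * f64::from(instances)
}

// Example use with the article's rough sizes and a hypothetical fleet of 50:
// percent_reduction(100.0, 20.0) and percent_reduction(200.0, 20.0) bound the savings,
// total_pull_mb(200.0, 50) versus total_pull_mb(20.0, 50) compares network transfer.
```

With these inputs, a 20MB image replacing a 100MB or 200MB one is an 80% or 90% reduction, and a 50-instance scale-out pulls 1,000MB in total instead of 5,000MB to 10,000MB.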

This image demonstrates the final output from this article.

Congratulations

And that's it! You now have a pattern for building and packaging a simple Rust-based API with an Alpine Linux-based image in Docker.